Your Guardian Agent in the Era of Autonomous AI – Part 2: Aligning User Intent with Your Agents

In Part 1, we introduced the Guardian Agent – a protective layer that oversees and secures AI agents as they operate autonomously. In this follow-up, we focus on event types and why each requires different considerations for a Guardian Agent.

What Are Event Types?

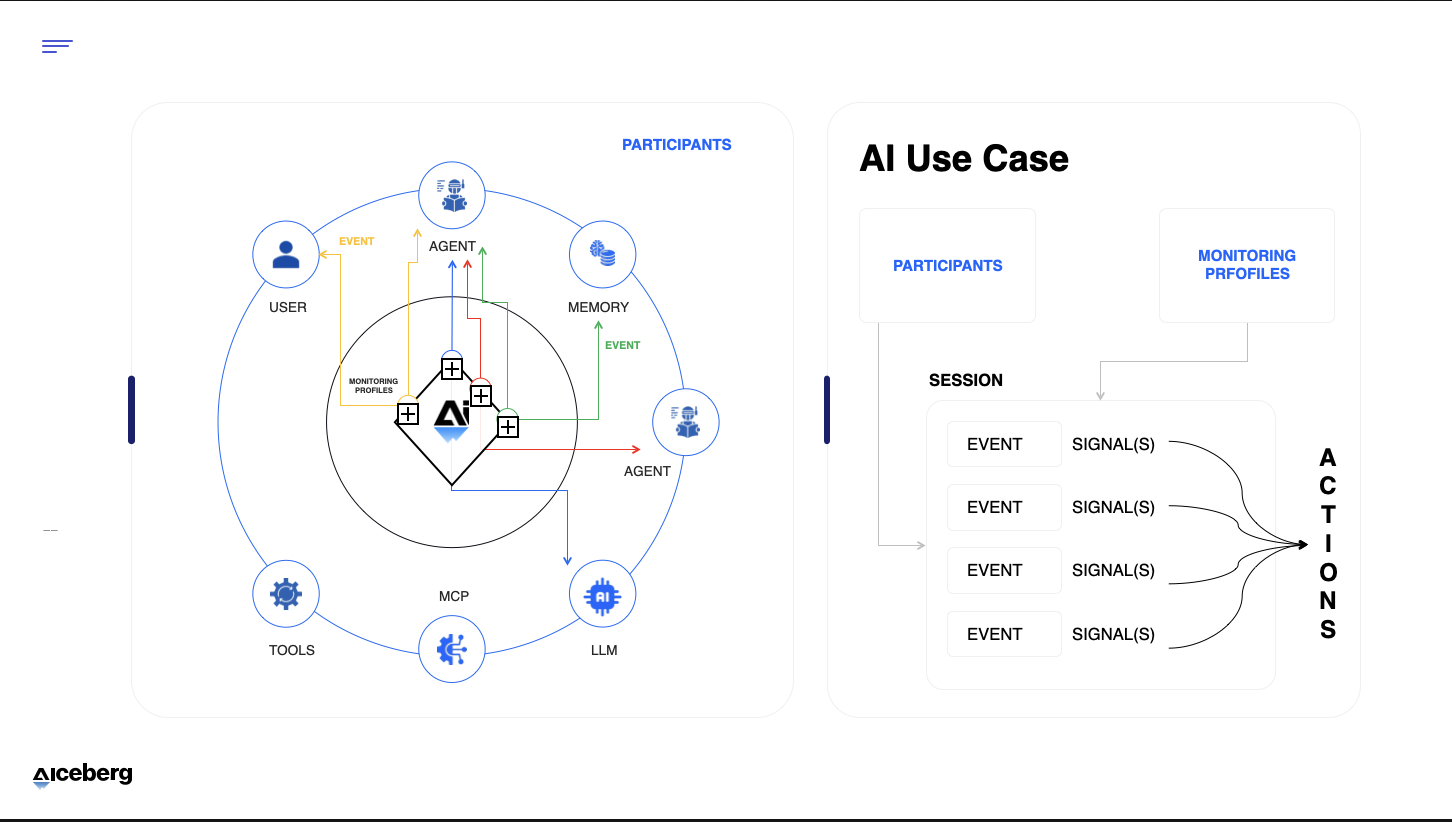

Event types are the communications between participants in an agentic flow. A participant is anything that plays a role in the flow, such as:

- A user providing input

- An agent orchestrating tasks

- A tool performing actions

- Memory storing or retrieving knowledge

- A model used for natural language reasoning

Each event type captures both the request and response between participants. By classifying these interactions, we can see where risks emerge and where a Guardian Agent must intervene.

The Five Core Event Types

- U2M (User → Machine): Human input to the agent.

- A2MO (Agent → Model): The agent sending prompts to its underlying model.

- MEM-ACCS (Memory Access): An agent accessing memory stores.

- T-INVK (Tool Invocation): The agent calling an external tool, for example an MCP server or an API.

- A2A (Agent → Agent): Communication between two or more agents.
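
One way to make this taxonomy concrete is to model it as data. The sketch below is illustrative only: the `Event` record, the `classify` helper, and the participant kinds in the routing table are hypothetical names chosen for this example, not part of any Guardian Agent API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class EventType(Enum):
    """The five core event types listed above."""
    U2M = "User -> Machine"
    A2MO = "Agent -> Model"
    MEM_ACCS = "Memory Access"
    T_INVK = "Tool Invocation"
    A2A = "Agent -> Agent"


@dataclass(frozen=True)
class Event:
    """One request/response exchange between two participants (hypothetical schema)."""
    event_type: EventType
    sender: str
    receiver: str
    request: str
    response: Optional[str] = None  # set once the receiver has replied


# Hypothetical routing table: (sender kind, receiver kind) -> event type.
_ROUTES = {
    ("user", "agent"): EventType.U2M,
    ("agent", "model"): EventType.A2MO,
    ("agent", "memory"): EventType.MEM_ACCS,
    ("agent", "tool"): EventType.T_INVK,
    ("agent", "agent"): EventType.A2A,
}


def classify(sender_kind: str, receiver_kind: str) -> EventType:
    """Map a pair of participant kinds to an event type, or raise if unknown."""
    try:
        return _ROUTES[(sender_kind, receiver_kind)]
    except KeyError:
        raise ValueError(f"no event type for {sender_kind} -> {receiver_kind}") from None


# Example: an agent calling a calendar API is a tool invocation.
event = Event(classify("agent", "tool"), "planner", "calendar-api", "create meeting")
```

Keeping the request and the response on the same record mirrors the point that each event type captures both directions of the exchange.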

General Considerations for All Event Types

Guardian Agents must always check for adversarial attacks, toxic content, and sensitive data exposure, and must enforce policy, across all event types. These are the baseline protections. However, each event type introduces its own unique considerations that go beyond these common concerns.

In the sections that follow, we’ll go in depth on each event type, focusing specifically on these unique considerations rather than repeating the shared ones.
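
The split between shared and event-specific checks can be sketched as a small registry: baseline checks run on every event, and extra checks are looked up by event type. Everything here is a toy assumption made for illustration, including the function names, the keyword and regex heuristics, and the example of a destructive-action check for T-INVK; real detection would be far more involved.

```python
import re
from typing import Callable, Dict, List

# A check takes a payload and returns a list of findings (empty means clean).
Check = Callable[[str], List[str]]


def sensitive_data_check(payload: str) -> List[str]:
    # Toy heuristic: a bare 16-digit number might be a payment card number.
    return ["possible card number"] if re.search(r"\b\d{16}\b", payload) else []


def adversarial_check(payload: str) -> List[str]:
    # Toy heuristic: one well-known prompt-injection phrase.
    hit = "ignore previous instructions" in payload.lower()
    return ["possible prompt injection"] if hit else []


def destructive_tool_check(payload: str) -> List[str]:
    # Hypothetical T-INVK-only check: flag requests that start with "delete".
    return ["destructive tool action"] if payload.lower().startswith("delete") else []


# Baseline protections apply to every event type.
BASELINE_CHECKS: List[Check] = [sensitive_data_check, adversarial_check]

# Event-specific checks are added on top of the baseline.
EXTRA_CHECKS: Dict[str, List[Check]] = {"T-INVK": [destructive_tool_check]}


def inspect_event(event_type: str, payload: str) -> List[str]:
    """Run baseline checks, then any checks registered for this event type."""
    findings: List[str] = []
    for check in BASELINE_CHECKS + EXTRA_CHECKS.get(event_type, []):
        findings.extend(check(payload))
    return findings
```

With this layout, `inspect_event("U2M", "delete all invoices")` reports nothing, while the same payload on `"T-INVK"` is flagged, which is the kind of per-event difference the following sections describe.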

1. U2M (User → Machine)

What it is: Input ultimately triggered by a user, whether typed directly or passed indirectly (e.g., batch process, service ticket). In agentic flows this input goes to an agent; the same event type applies to simpler AI apps that have no agent, hence "Machine" rather than "Agent".

Unique Considerations:

- It is the first trigger of an agentic flow, carrying the user’s intent and purpose that all subsequent events must align with.

- The final response to the user is the last line of defense, where sensitive data, unexpected intent, or unapproved decisions may surface.

- It is the most vulnerable entry point, with limited programmatic control and an open, easy-to-use interface that attackers are most likely to target.

2. A2MO (Agent → Model)

What it is: The agent querying its underlying LLM or model for intelligence to plan and decide actions aligned with the user’s intent.

Unique Considerations:

- As the brain of the agent, this is where prompt injection and jailbreaks can derail everything.

- Bias and hallucinations often surface here, and without a strong source of truth they’re hard to detect.

- The agent’s plan must align with the user’s original intent to avoid misdirection.

3. MEM-ACCS (Memory Access)

What it is: An agent accessing memory for short- and long-term storage tied to users and sessions, so context can be preserved and applied to fulfill the user’s intent.

Unique Considerations:

- Session separation is harder to enforce than traditional access control, which makes memory a prime target for tampering. Such tampering may affect only a single user and therefore evade detection.

- Only relevant data should be pulled to support the user’s intent, avoiding arbitrary context that could mislead the agent’s execution plan.
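
Both considerations can be illustrated with a memory store that is keyed by user and session and that filters reads by relevance to the current intent. This is a minimal sketch under stated assumptions: `SessionMemory` is a hypothetical class, and word overlap stands in for whatever relevance measure a real system would use.

```python
from typing import Dict, List, Tuple

SessionKey = Tuple[str, str]  # (user_id, session_id)


class SessionMemory:
    """Toy in-memory store that never reads across users or sessions."""

    def __init__(self) -> None:
        self._entries: Dict[SessionKey, List[str]] = {}

    def write(self, user_id: str, session_id: str, text: str) -> None:
        self._entries.setdefault((user_id, session_id), []).append(text)

    def read(self, user_id: str, session_id: str, intent: str) -> List[str]:
        """Return only this session's entries that share a word with the intent."""
        intent_words = set(intent.lower().split())
        own_entries = self._entries.get((user_id, session_id), [])
        return [e for e in own_entries if intent_words & set(e.lower().split())]


memory = SessionMemory()
memory.write("alice", "s1", "flight to Paris booked")
memory.write("alice", "s1", "prefers oat milk")
memory.write("bob", "s9", "flight to Rome booked")

# Only Alice's own, intent-relevant entry comes back.
relevant = memory.read("alice", "s1", "change my flight")
```

Because the lookup key always includes both the user and the session, an entry written in Bob's session cannot surface in Alice's, even when it matches her intent.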

4. T-INVK (Tool Invocation)

What it is: An agent calling an external tool, API, or MCP server to take action, such as updating calendars, sending payments, or responding to emails. This is where the agent moves beyond reading to writing and executing outcomes in the real world.

Unique Considerations:

- This is where the rubber meets the road: the true value of agents comes from taking autonomous actions, and this is also where the greatest risks lie.

- Actions and tools executed must align with the user’s intent and the plan the agent has created.
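
A simple way to picture the alignment requirement is a gate that runs before every tool call and compares it with the agent's plan and with the tools the user is permitted to use. The sketch below assumes a plan can be represented as a set of expected calls; `ToolCall`, `authorize`, and the tool names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class ToolCall:
    tool: str
    action: str


def authorize(
    call: ToolCall,
    plan: FrozenSet[ToolCall],
    allowed_tools: FrozenSet[str],
) -> Tuple[bool, str]:
    """Allow a call only if the tool is permitted and the call is in the plan."""
    if call.tool not in allowed_tools:
        return False, f"tool '{call.tool}' is not permitted for this user"
    if call not in plan:
        return False, f"'{call.tool}.{call.action}' is not part of the agent's plan"
    return True, "ok"


# Hypothetical plan derived from the intent "schedule a meeting".
plan = frozenset({ToolCall("calendar", "create_event")})
allowed_tools = frozenset({"calendar", "email"})

planned = authorize(ToolCall("calendar", "create_event"), plan, allowed_tools)
unplanned = authorize(ToolCall("email", "send"), plan, allowed_tools)
forbidden = authorize(ToolCall("payments", "send"), plan, allowed_tools)
```

Returning a reason alongside the decision keeps a blocked action explainable, which matters when the action would have had real-world effects.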

5. A2A (Agent → Agent)

What it is: Communication between agents for delegation, collaboration, or coordination. Unlike T-INVK, which executes predefined tools, A2A involves agents deciding how and when to use tools for broader requests. Specialized agents improve quality and limit exposure compared to a single all-purpose agent.

Unique Considerations:

- Cascading errors and feedback loops can spread quickly and drift from user intent.

- User intent must remain central, even when actions are delegated to other agents.

- Specialized agents reduce risk by limiting scope and blast radius.
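
To show how drift and feedback loops might be contained, the sketch below carries the user's original intent unchanged through each delegation and refuses hand-offs that loop back to an earlier agent or exceed a hop limit. The `Delegation` record, the `delegate` function, and the default limit of three agents are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Delegation:
    original_intent: str    # never rewritten as the request is handed on
    path: Tuple[str, ...]   # agents that have handled the request so far


def delegate(current: Delegation, to_agent: str, max_hops: int = 3) -> Delegation:
    """Hand the request to another agent, rejecting loops and long chains."""
    if to_agent in current.path:
        raise ValueError(f"delegation loop: {to_agent} already handled this request")
    if len(current.path) >= max_hops:
        raise ValueError(f"delegation chain exceeds {max_hops} agents")
    return Delegation(current.original_intent, current.path + (to_agent,))


start = Delegation("book a flight to Paris", ("orchestrator",))
step = delegate(start, "travel-agent")
```

Because `Delegation` is frozen and `delegate` copies `original_intent` forward, every downstream agent sees the same statement of intent that the first agent received.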

Ensuring Alignment of User Intent Across Event Types

To maintain trust and safety, Guardian Agents must ensure that user intent is preserved consistently across all event types:

- U2M: Capture intent accurately at the start and guard against manipulation.

- A2MO: Ensure reasoning and plans created by the model stay aligned with user intent.

- MEM-ACCS: Retrieve and store only context that reinforces user goals, not noise.

- T-INVK: Confirm that real-world actions match the user’s purpose and the agent’s plan.

- A2A: Prevent drift when delegating tasks, ensuring the user’s original intent drives all agents.

Maintaining this alignment ensures that every step of the agentic flow remains trustworthy, safe, and purpose-driven.

Conclusion

As autonomous AI systems become more capable, the challenge lies not in their ability to act but in ensuring those actions remain aligned with user intent. Guardian Agents address this by providing oversight across all five event types—U2M, A2MO, MEM-ACCS, T-INVK, and A2A—each with its own vulnerabilities and safeguards. By continuously monitoring and enforcing alignment, Guardian Agents preserve trust, minimize risks, and create a secure foundation for meaningful human-AI collaboration. Ultimately, the value of autonomy in AI is realized only when it remains safe, transparent, and firmly rooted in the user’s goals.
